ThoughtFrame

ThoughtFrame is an orchestration platform built for long-running, adaptive processes where automation, agentic AI, and human oversight work together.

The core building block is the ThoughtFrame: a self-contained unit powered by a Frame State Machine. Each ThoughtFrame enforces strict boundaries for data, users, and execution while enabling AI decision-making, synthesis, and content generation in a controlled context.

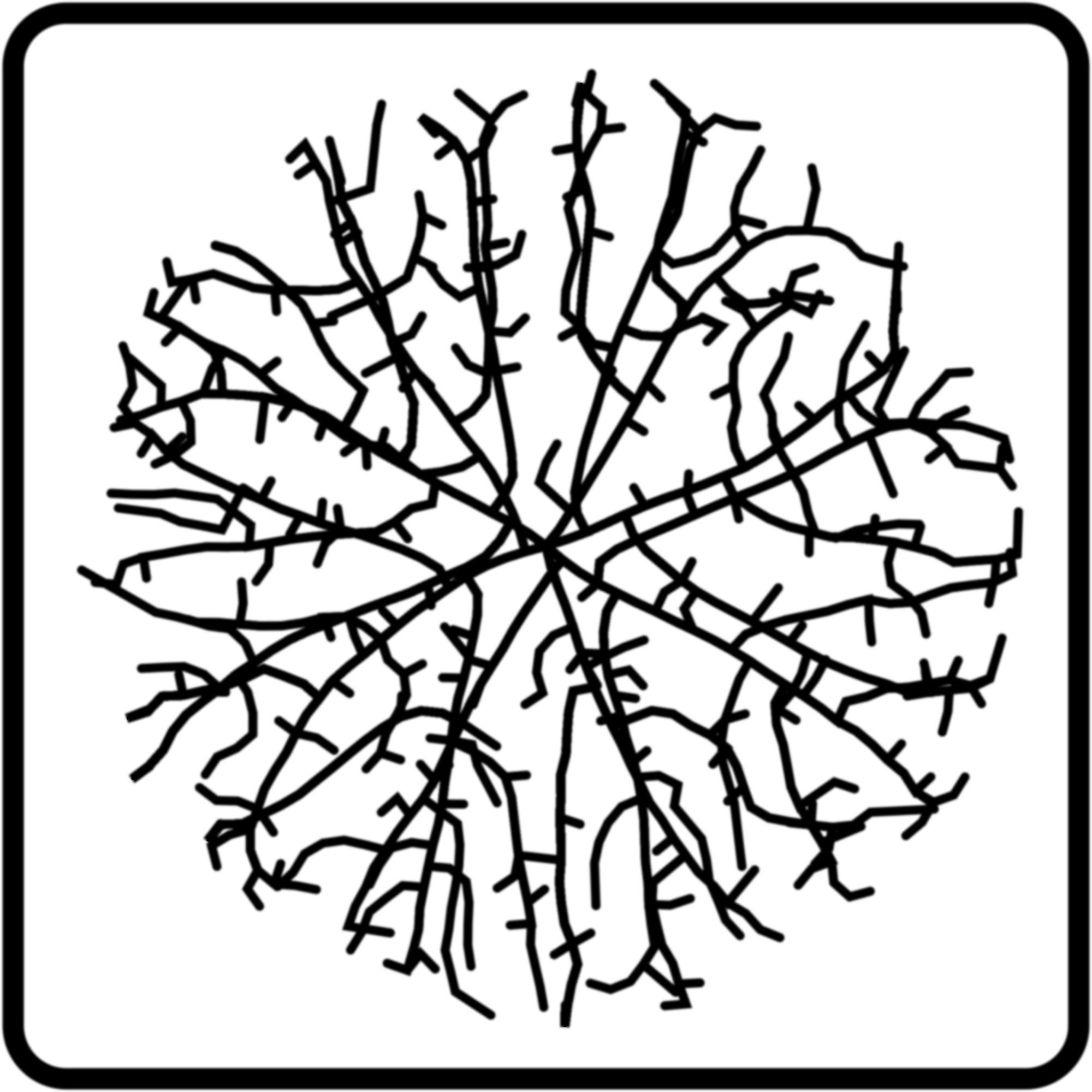

Connected ThoughtFrames form a Mesh — a living graph of orchestrated processes. Through these connections, ThoughtFrames can exchange signals, reach into one another for data, and coordinate across domains, making it possible to model complex systems as modular building blocks.

Every feature is production-grade, available live, and used internally — no demo sandboxes, no staged UIs. Platform improvements are deployed continuously; what you see is what you use.

ThoughtFrame is under active development. The platform evolves in discrete phases — engine, agentic control, deep memory, integration layers, and upcoming SDKs. You can follow the progress in the ThoughtFrame Roadmap, with open-source releases scheduled as major subsystems stabilize.

ThoughtFrame Core

ThoughtFrame Core is the runtime engine for all Frames. It delivers a resilient foundation for orchestrating long-running, adaptive processes where automation, AI, and human oversight converge.

- Frame State Machines: Every ThoughtFrame runs on a state machine that defines its lifecycle, actions, events, and error handling.

- Mesh Graph: Frames connect into a dynamic graph, exchanging signals, sharing context, and coordinating across domains in real time.

- Interactive & Conversational: Frames are fully explorable—you can navigate them visually, query them directly, or talk to them in natural language through AI-driven interfaces.

- Event Bus & Persistence: Built-in event handling, scheduling, and durable persistence for long-running workflows and recurring tasks.

- AI Integration: Native hooks for agentic AI decision-making, synthesis, and content generation inside controlled Frame contexts.

- Function Orchestration: Deterministic execution of system actions and AI calls, chaining outputs into complex flows without hidden state.

- Isolation & Security: Clear boundaries for data, users, and analytics at the Frame level—no leakage unless explicitly bridged.

- Extensibility: Core modules and adapters can be hot-deployed, with new actions, data types, or AI providers plugged in seamlessly.

Every feature, integration, and user experience in ThoughtFrame is powered by Core.
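
To make the Frame State Machine idea above concrete, here is a minimal sketch of a state machine with actions, events, and error handling. It is an illustration only: `FrameStateMachine`, `dispatch`, the state names, and the `draft`/`approve` actions are hypothetical and are not taken from the ThoughtFrame API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical names throughout: this is not the ThoughtFrame API.
Action = Callable[[dict], None]


@dataclass
class FrameStateMachine:
    state: str
    # Maps (current_state, event) to (next_state, action).
    transitions: Dict[Tuple[str, str], Tuple[str, Action]]
    error_state: str = "failed"
    context: dict = field(default_factory=dict)
    history: List[Tuple[str, str, str]] = field(default_factory=list)

    def dispatch(self, event: str) -> str:
        key = (self.state, event)
        if key not in self.transitions:
            # Events with no transition from the current state are ignored.
            return self.state
        next_state, action = self.transitions[key]
        previous = self.state
        try:
            action(self.context)
            self.state = next_state
        except Exception as exc:
            # A failing action moves the Frame into its error state.
            self.context["error"] = str(exc)
            self.state = self.error_state
        self.history.append((previous, event, self.state))
        return self.state


def draft(context: dict) -> None:
    context["draft"] = "proposal v1"


def approve(context: dict) -> None:
    if "draft" not in context:
        raise ValueError("nothing to approve")
    context["approved"] = True


def build_frame() -> FrameStateMachine:
    return FrameStateMachine(
        state="idle",
        transitions={
            ("idle", "start"): ("awaiting_review", draft),
            ("awaiting_review", "human_approved"): ("done", approve),
        },
    )
```

In this sketch the `human_approved` event stands in for human oversight: the Frame waits in `awaiting_review` until that event arrives, and every transition is appended to `history` so a run can be inspected afterwards.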

Extended Core Capabilities

On top of its foundation, ThoughtFrame Core delivers advanced capabilities that make Frames not just secure and scalable, but interactive and AI-ready:

- Natural Language Templates: Velocity-based rendering extended with AI, allowing natural language prompts to generate or adapt live dashboards, emails, and reports.

- Interactive Frames: Frames can be explored, queried, and managed directly—either visually or through natural language conversation.

- Agentic AI Workflows: Frames can invoke AI for decision-making, synthesis, and content generation, blending automation with human oversight.

- Frame Mesh: ThoughtFrames connect into a graph, reaching into one another to share data or coordinate across processes without breaking isolation.

- Long-Running Orchestration: Built-in scheduling, event handling, and persistence keep workflows alive across hours, days, or months.

These capabilities transform Frames from isolated units into a living, conversational system for orchestration and AI collaboration.

ThoughtFrame Library

ThoughtFrame Library is the active memory layer of the platform — a living knowledge substrate where every document, dataset, and media asset becomes part of a stateful reasoning environment. Instead of retrieving content on demand, Frames interact with knowledge that is already contextually prepared for immediate use.

- Any Format, Live Context: PDFs, Office files, images, video, data tables, and code are all ingested and made addressable within the Agentic FSM. Content is not static—it remains accessible as an active context for reasoning and automation.

- Hybrid Metadata & Semantic Search: Structured metadata, tags, and permissions are fused with semantic and proximity-based retrieval—enabling precise, context-aware queries within adaptive workflows or learning environments.

- State-Linked Retrieval: Each FSM state can dynamically draw from the Library using its own scoped context, allowing Frames to “think with” prior material rather than repeatedly reprocessing it.

- Collaborative Knowledge Flows: Educators, analysts, and operators can refine and synthesize materials in real time—building study modules, process briefings, or decision guides directly from Library sources.

- Enterprise Provenance: Built on the EnterMedia core, ensuring secure versioning, permissions, audit trails, and regulatory-grade traceability.

- Unified Integration Surface: REST and WebSocket APIs expose Library and FSM context to external systems such as LMSs, data platforms, or cloud storage (S3, Google Drive, etc.).
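
The hybrid retrieval described in the list above can be sketched as a metadata and permission filter followed by a semantic ranking. This is an assumption about the general shape of such a query, not the Library's actual implementation: `Asset`, `hybrid_search`, and the two-dimensional toy embeddings are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Set


@dataclass
class Asset:
    title: str
    tags: Set[str]
    allowed_roles: Set[str]
    embedding: Sequence[float]  # toy vector standing in for a real embedding


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def hybrid_search(
    assets: List[Asset],
    query_embedding: Sequence[float],
    required_tags: Set[str],
    role: str,
    limit: int = 3,
) -> List[Asset]:
    # Step 1: structured filter on tags and permissions.
    candidates = [
        a for a in assets
        if required_tags <= a.tags and role in a.allowed_roles
    ]
    # Step 2: rank the remaining assets by semantic similarity to the query.
    candidates.sort(key=lambda a: cosine(a.embedding, query_embedding), reverse=True)
    return candidates[:limit]


ASSETS = [
    Asset("Hose handling guide", {"fire", "exam"}, {"student", "instructor"}, [1.0, 0.0]),
    Asset("Instructor answer key", {"fire"}, {"instructor"}, [0.9, 0.1]),
    Asset("Radio protocol notes", {"fire", "exam"}, {"student"}, [0.0, 1.0]),
]
```

Filtering before ranking means an asset the caller is not permitted to see never enters the similarity step, which is one simple way to keep permissions and semantic search consistent.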

AI, RAG & FSM Convergence

- Contextual Reasoning: AI agents and FSM transitions can access the Library as an extension of working memory—summarizing, reasoning, or generating new materials directly from existing context.

- Secure & Deterministic: Each query runs within a controlled Frame scope—auditable, replayable, and consistent across sessions. Nothing is transient or lost between runs.

- Generative Enrichment: Frames can synthesize derivative artifacts—flashcards, outlines, checklists, or code—from verified Library content, ensuring every generation remains grounded and reproducible.

ThoughtFrame Library transforms stored data into a persistent reasoning substrate—accelerating retrieval, improving accuracy, and turning every document into a live participant in adaptive workflows.

ThoughtFrame LMS

ThoughtFrame LMS is a production-grade learning management system already deployed across professional training, certification, and higher education. It delivers the core elements of structured learning—Pods, Modules, Activities, and a centralized Content Library—combined with full-featured assessment and grading.

- Pods: The central grouping of learners, instructors, and content. Each Pod is stateful, with its own members, history, and analytics.

- Modules & Activities: Reusable Modules organize learning units, and Activities (assignments, quizzes, discussions, projects) bring them to life inside Pods.

- Question Banks: Robust repositories of questions in every format—multiple choice, short answer, essay, coding, file upload—supporting large-scale test prep and certification programs.

- Grading & Feedback: Automated scoring, instructor review, rubrics, and detailed feedback workflows provide fairness and transparency across all assessment types.

- Content Library: Backed by the EnterMedia DAM, the Library powers courses with managed documents, media, and datasets—ensuring versioning, permissions, and reuse at scale.

- Proven in Production: Already trusted in high-stakes environments, including fire service exam prep, professional training pipelines, and college deployments.

See what’s coming next:

View LMS Roadmap →

ThoughtFrame Code

ThoughtFrame Code is a fully integrated engine for code orchestration, assessment, and developer workflows, engineered for secure, scalable, real-world technical evaluation and learning.

- First-Class Git Integration: Every Frame can be linked directly to one or more Git repositories, with support for full clone/push/pull, commit history, branches, tags, and granular file access. No simulated sandboxes: work with real repositories and production-grade flows.

- Gradle-Native Build/Test Automation: Leverage industry-standard Gradle for compiling, testing, static analysis, and dependency management. Automate builds, code linting, and end-to-end tests per Frame, user, or submission.

- Docker Orchestration for Security: All code execution and builds run in isolated Docker containers for reproducibility, security, and resource control. Custom images and environment configuration are supported for different stacks and languages.

- Applicant Testing & Tech HR Workflows: Deliver real coding challenges, take-home projects, or live technical interviews with true Git workflows. Assignments are tracked, versioned, and auto-scored by triggers (commit, PR, or manual event).

- Frame-Scoped Collaboration: Candidate and reviewer workspaces (Frames) are isolated, enabling secure peer review, threaded feedback, inline comments, and collaborative learning for classrooms or teams.

- Pluggable Code Analysis & AI Review: Integrate custom static analysis, code similarity, test harnesses, or AI-driven code review (LLM function calls, RAG-driven code search, etc.) for each Frame.

- Actionable Analytics: Every build, commit, test, or review is tracked, timestamped, and reportable, enabling deep analytics for HR teams, instructors, and candidates.

- Flexible API & Automation: Trigger CI/CD events, auto-grade assignments, or integrate with external HR, DevOps, or LMS systems via REST/WebSocket APIs.
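
As a rough illustration of running a Gradle task for one submission inside an isolated container, the sketch below assembles a `docker run` command line. The function name `sandbox_command`, the image name, and the workspace path are hypothetical placeholders; the Docker and Gradle flags are standard, but how ThoughtFrame Code actually invokes them is not described in this document.

```python
from typing import List


def sandbox_command(
    image: str,
    workspace: str,
    task: str = "test",
    memory: str = "512m",
    cpus: str = "1.0",
    network: str = "none",
) -> List[str]:
    """Build (but do not run) a docker command for one submission."""
    command = [
        "docker", "run", "--rm",   # remove the container when it exits
        "--network", network,      # "none" disables all networking
        "--memory", memory,        # hard memory limit
        "--cpus", cpus,            # CPU quota
        "-v", f"{workspace}:/workspace",
        "-w", "/workspace",
        image,
        "gradle", task, "--no-daemon",
    ]
    if network == "none":
        # Without network access, dependencies must already be in the image,
        # so Gradle is told not to attempt any downloads.
        command.append("--offline")
    return command


# Placeholder image and path, for illustration only.
EXAMPLE = sandbox_command("example/gradle-jdk17:latest", "/tmp/submission-42")
```

The resulting list can be passed to `subprocess.run` on a host with Docker installed. Building the argument list separately from executing it keeps the resource limits easy to test and audit.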

ThoughtFrame Mesh

ThoughtFrame Mesh is the heart of the platform — a living graph of ThoughtFrames that orchestrates long-running processes, AI-driven decisions, and human collaboration. Mesh is no longer a skunkworks project; it is the defining product.

- Graph of Frames: Each ThoughtFrame runs on a Frame State Machine, and Mesh links them into a connected system where workflows share context, pass signals, and coordinate across domains.

- Interactive & Explorable: Mesh is not static — you can explore it visually, query it, and even talk to Frames in natural language.

- AI-Native: Frames embed AI for decision-making, synthesis, and content generation, while Mesh enables multi-Frame reasoning, semantic search, and retrieval across connections.

- Rich Context Boundaries: Every Mesh segment is scoped to its Frame, ensuring privacy and isolation unless explicitly bridged.

- Open & Extensible: Developer hooks allow integration, automation, and federation across multiple Mesh graphs, with AI-assisted graph building already emerging.

- Shaped by Real Use: Mesh has grown from real-world deployments — orchestrating service workflows, AI tutors, research assistants, and more — all dogfooded internally before release.
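
The rule that Frame data stays private unless explicitly bridged can be sketched as a graph that refuses cross-Frame reads by default. The `Frame`, `Mesh`, `bridge`, and `read` names below are hypothetical and only illustrate the access pattern, not the Mesh implementation.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Set, Tuple


@dataclass
class Frame:
    name: str
    data: Dict[str, Any] = field(default_factory=dict)


class Mesh:
    def __init__(self) -> None:
        self.frames: Dict[str, Frame] = {}
        # (reader, owner, key) triples that have been explicitly bridged.
        self.bridges: Set[Tuple[str, str, str]] = set()

    def add(self, frame: Frame) -> None:
        self.frames[frame.name] = frame

    def bridge(self, reader: str, owner: str, key: str) -> None:
        """Allow `reader` to see one key of `owner`, and nothing else."""
        self.bridges.add((reader, owner, key))

    def read(self, reader: str, owner: str, key: str) -> Any:
        # A Frame can always read its own data; other Frames need a bridge.
        if reader != owner and (reader, owner, key) not in self.bridges:
            raise PermissionError(f"{reader} has no bridge to {owner}.{key}")
        return self.frames[owner].data[key]


mesh = Mesh()
mesh.add(Frame("tutor", {"lesson_plan": "week 3", "student_notes": "private"}))
mesh.add(Frame("research"))
mesh.bridge("research", "tutor", "lesson_plan")
```

Bridges here are granted per key, so sharing `lesson_plan` with the `research` Frame does not expose `student_notes`.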

ThoughtFrame Chain

ThoughtFrame Chain provides a unified layer for real-time blockchain data indexing, extraction, and event generation across multiple chains and protocols. It enables search, analytics, and AI to operate on meaningful, structured on-chain data, serving as the foundation for advanced monitoring, alerting, and automation tools.

- Cross-Chain Indexing: Aggregates and normalizes transactions, events, and protocol data from Ethereum, Bitcoin, Tron, and other networks. Structured storage allows instant querying and replay.

- Semantic Event Extraction: Parses raw blockchain activity into high-level events (transfers, swaps, position changes, liquidations, payments), enriched with metadata and ready for downstream consumption.

- Search & Analytics Ready: All indexed data is accessible for advanced search, AI-driven analysis, reporting, or custom workflow triggers. Enables ad hoc queries on user accounts, tokens, protocols, and address histories.

- Event Bus & Trigger System: Every extracted event can emit signals to connected applications, supporting real-time notifications, dashboards, or external automation.

- Foundation for Monitoring & Alerting: Built to be the core analytics substrate for whale tracking, wallet monitoring, payment detection, and compliance systems. You define the business logic; Chain provides the data, events, and search.

- Open & Extensible: Add new chains, protocol decoders, and custom event types with minimal friction. An extensible API supports integration with any analysis or alerting stack.

ThoughtFrame Chain powers data extraction, indexing, and event-driven analytics for crypto and DeFi, enabling rich search, AI, and automation across all chains and protocols.
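
The extraction-and-trigger flow described in this section can be sketched as a decoder that turns raw records into high-level events and a small bus that notifies subscribers. Everything here is a simplified assumption: the raw record layout, `ChainEvent`, `extract_event`, and `EventBus` are hypothetical, and real chain data needs protocol-specific decoding that this sketch skips.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, DefaultDict, Dict, List, Optional


@dataclass(frozen=True)
class ChainEvent:
    kind: str
    chain: str
    sender: str
    recipient: str
    amount: int


def extract_event(raw: Dict) -> Optional[ChainEvent]:
    """Map one simplified, already-decoded record to a high-level event."""
    if raw.get("name") != "Transfer":
        return None  # other record types are out of scope for this sketch
    return ChainEvent("transfer", raw["chain"], raw["from"], raw["to"], int(raw["value"]))


class EventBus:
    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Callable[[ChainEvent], None]]] = defaultdict(list)

    def subscribe(self, kind: str, handler: Callable[[ChainEvent], None]) -> None:
        self._handlers[kind].append(handler)

    def publish(self, event: ChainEvent) -> None:
        for handler in self._handlers[event.kind]:
            handler(event)


def index(raw_logs: List[Dict], bus: EventBus) -> List[ChainEvent]:
    """Extract events from raw records and emit each one on the bus."""
    events = []
    for raw in raw_logs:
        event = extract_event(raw)
        if event is not None:
            events.append(event)
            bus.publish(event)
    return events


# Business logic lives in the subscriber: a toy whale alert with a fixed threshold.
whale_alerts: List[ChainEvent] = []
bus = EventBus()
bus.subscribe("transfer", lambda e: whale_alerts.append(e) if e.amount >= 1_000_000 else None)

RAW_LOGS = [
    {"name": "Transfer", "chain": "ethereum", "from": "0xaaa", "to": "0xbbb", "value": "2500000"},
    {"name": "Approval", "chain": "ethereum", "from": "0xaaa", "to": "0xccc", "value": "1"},
    {"name": "Transfer", "chain": "tron", "from": "Taaa", "to": "Tbbb", "value": "50"},
]
indexed = index(RAW_LOGS, bus)
```

The split mirrors the division of labor stated above: the indexer produces structured events, and the subscriber (here a threshold check) carries the business logic.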

Request a Demo / Your Own Frame

Want to see ThoughtFrame in action, or try your own private Frame with real modules and data?

Email us at info@thoughtframe.ai and we’ll get you set up.